
As a result of the growth in 5G deployment, the telecommunications industry has been paying more attention to edge computing as a potential value-added service that can drive new revenue for both telcos and cloud providers. In this post, Wenyu Shen, Senior Manager & Principal Architect at NTT Communications, discusses the growth of production edge computing deployments in the manufacturing industry (Industry 4.0). In this sector, cutting-edge AI and IoT solutions have been deployed to make production safer and more efficient through improved automatic machine control and product and equipment inspection. He explains why we can expect this edge computing trend to appear in many other market segments, starting with retail, healthcare, connected cars, and smart cities. He also describes how MEF’s open standard business and operational LSO APIs, together with MEF edge computing standards, are opening the way to multi-provider edge computing services on a global scale.

Challenges

The core concept of edge computing is provisioning cloud infrastructure closer to end users than today’s traditional cloud offerings do. For example, the infrastructure could be located in a data center, a bureau office building, or even on the customer’s premises. For an edge computing service provider, one of the challenges of bringing compute closer to end users is the high capital investment required to provide “true” edge computing service nationwide, let alone at a global scale. Such scale is typically beyond the means of a single service provider.

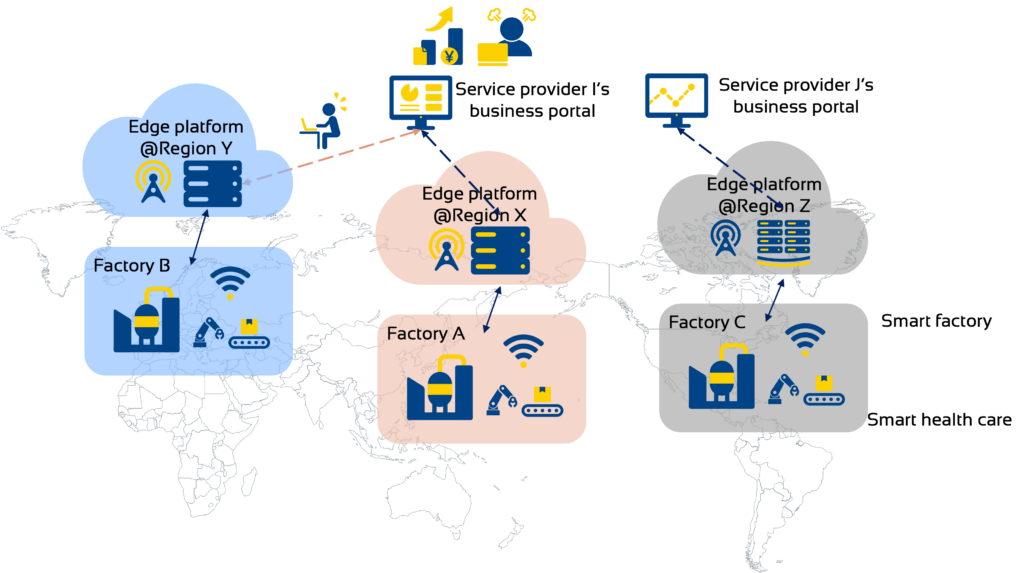

Figure 1: Challenges for edge computing consumers and service providers.

These challenges are illustrated by the example in figure 1. A manufacturer operating factory A has deployed an AI solution for automatic plant operation on the edge computing platform provided by Service Provider I in region X. After the solution proves its effectiveness, the manufacturer decides to deploy it for factory B in region Y as well. However, Service Provider I has no edge computing footprint of its own in region Y. Fortunately, a partner of Service Provider I offers a similar edge computing service in region Y, although it uses technology from a different vendor.

Expecting growing business opportunities in region Y, Service Provider I decides to integrate its customer portal and Business Support System/Operations Support System (BSS/OSS) with its partner’s edge platform to give the manufacturer a consistent customer experience. Later, the manufacturer decides to expand the AI solution further to factory C in region Z, where neither Service Provider I nor any of its partners has an edge computing presence. The manufacturer therefore needs to engage Service Provider J directly, even though Service Provider J has a completely different customer portal and BSS.

In this simple example, we can already see the following business friction:

- The manufacturer has to engage with two edge computing service providers, I and J, instead of only its preferred Service Provider I, to cover regions X, Y, and Z.

- Service Provider I has to develop costly BSS/OSS integration with a partner for region Y.

The result is more customer-facing interfaces, more complex operational procedures, higher integration costs, delayed service availability, and lost business for Service Provider I in regions where it has no edge computing presence.
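To make this friction concrete, the figure 1 scenario can be modeled in a few lines of Python. This is a hypothetical sketch: the footprint tables and function names are illustrative assumptions, not part of any MEF specification or provider system.

```python
# Hypothetical model of the figure 1 scenario (illustrative names only).

# Regions where each provider operates its own edge platform (assumed).
OWN_FOOTPRINT = {
    "I": {"X"},
    "Partner": {"Y"},
    "J": {"Z"},
}

# Service Provider I resells its partner's region-Y platform through a
# custom, bilateral BSS/OSS integration (one entry per integration).
RESELLS_VIA_PARTNER = {"I": {"Y": "Partner"}}


def customer_facing_providers(regions: list[str], preferred: str) -> set[str]:
    """Return the providers the customer must contract with directly."""
    providers = set()
    for region in regions:
        covered = (region in OWN_FOOTPRINT[preferred]
                   or region in RESELLS_VIA_PARTNER.get(preferred, {}))
        if covered:
            providers.add(preferred)
            continue
        # Otherwise the customer must go to whoever has a footprint there.
        other = next((p for p, regs in OWN_FOOTPRINT.items() if region in regs), None)
        if other is None:
            raise LookupError(f"no edge footprint in region {region}")
        providers.add(other)
    return providers


def bilateral_integrations(preferred: str) -> int:
    """Number of custom BSS/OSS integrations the preferred provider maintains."""
    return len(RESELLS_VIA_PARTNER.get(preferred, {}))


factory_regions = ["X", "Y", "Z"]  # factories A, B, and C
print(sorted(customer_facing_providers(factory_regions, "I")))  # ['I', 'J']
print(bilateral_integrations("I"))  # 1
```

Each new region outside the preferred provider’s reach adds either another provider the customer must deal with or another bespoke integration for the provider, which is the scaling problem described above.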

Solutions

MEF’s open standard business and operational LSO APIs, together with MEF edge computing standards, can eliminate this scaling and business-friction problem in the emerging world of edge computing. The approach extends to edge computing the same method that has been used successfully to streamline business and operational interactions between service providers for Carrier Ethernet and Internet Access. In other words, by using the same LSO APIs with an edge computing product payload (data schema), we can overcome the challenges described earlier in this post.
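The idea of reusing the same API with a different payload can be sketched as a generic order envelope whose handling is identical for every product, with only the payload schema check varying by product type. The envelope fields, schema identifiers, and required keys below are hypothetical simplifications, not the actual LSO Cantata or Sonata data models.

```python
from dataclasses import dataclass, field
from typing import Any


# Hypothetical, simplified order envelope shared by all product types.
@dataclass
class ProductOrderItem:
    action: str                 # e.g. "add", "modify", "delete"
    product_spec: str           # selects which payload schema applies
    payload: dict[str, Any] = field(default_factory=dict)


# Per-product payload schemas, reduced here to sets of required keys.
# Both identifiers and keys are illustrative assumptions.
REQUIRED_KEYS = {
    "carrier-ethernet-evc": {"bandwidthMbps", "uniIds"},
    "edge-compute-iaas": {"vcpu", "memoryGiB", "siteRegion"},
}


def validate(item: ProductOrderItem) -> list[str]:
    """Envelope handling is shared; only the schema lookup differs by product."""
    required = REQUIRED_KEYS.get(item.product_spec)
    if required is None:
        return [f"unknown product spec: {item.product_spec}"]
    missing = sorted(required - set(item.payload))
    return [f"missing {key}" for key in missing]


ethernet = ProductOrderItem("add", "carrier-ethernet-evc",
                            {"bandwidthMbps": 100, "uniIds": ["uni-1", "uni-2"]})
edge = ProductOrderItem("add", "edge-compute-iaas", {"vcpu": 8, "siteRegion": "Y"})
print(validate(ethernet))  # []
print(validate(edge))      # ['missing memoryGiB']
```

Adding edge computing in this model means registering one more payload schema, while the ordering machinery built for connectivity products stays unchanged.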

The Collaboration Blueprint

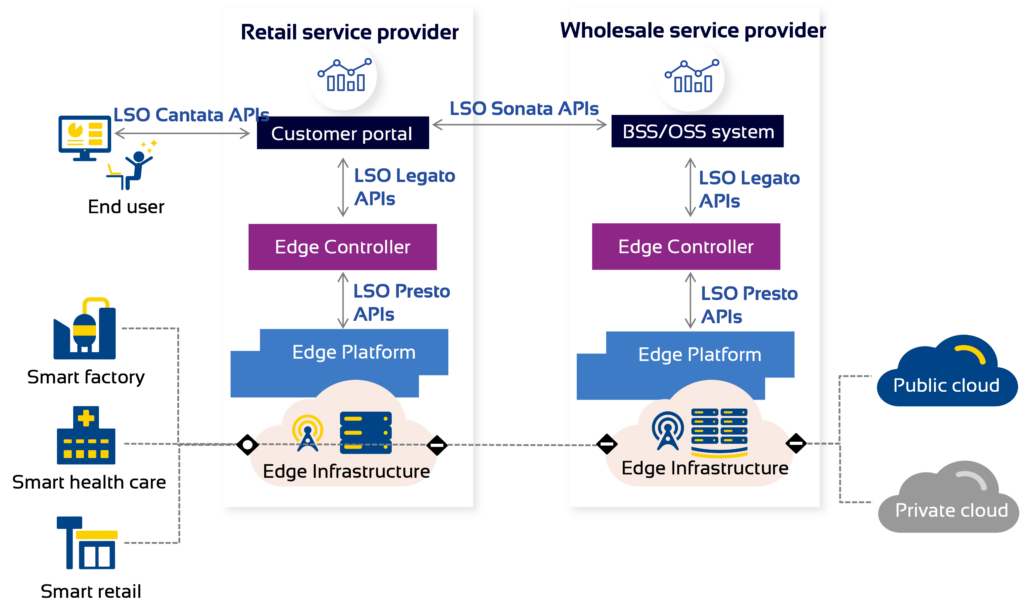

In figure 2, we explore this solution further by illustrating a collaboration blueprint for edge computing service providers, based on the MEF LSO framework.

Figure 2: The collaboration model among multiple edge service providers, based on the MEF LSO framework.

In this blueprint, we assume that an end user consumes the retail edge computing service provider’s resources and services via LSO Cantata APIs. Inside the service provider, based on the end user’s service orders, the customer portal or BSS/OSS sends the corresponding translated instructions to the edge controller via LSO Legato APIs, and from there to the edge platform via LSO Presto APIs.
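The order flow in this blueprint can be sketched as a chain of translations, one per LSO reference point. The message types, identifiers, and inventory lookup are hypothetical; the sketch only shows how each layer receives an order from the layer above and passes a more technical instruction to the layer below.

```python
from dataclasses import dataclass


# Hypothetical message types for each hop; real LSO payloads are far richer.
@dataclass
class CustomerOrder:        # end user -> provider (Cantata)
    customer_id: str
    vcpu: int
    region: str


@dataclass
class ServiceOrder:         # BSS/OSS -> edge controller (Legato)
    service_id: str
    vcpu: int
    region: str


@dataclass
class ResourceRequest:      # edge controller -> edge platform (Presto)
    platform: str
    vcpu: int


def bss_translate(order: CustomerOrder, seq: int) -> ServiceOrder:
    """Customer portal or BSS/OSS: turn a commercial order into a service order."""
    return ServiceOrder(f"svc-{order.customer_id}-{seq}", order.vcpu, order.region)


def controller_translate(svc: ServiceOrder) -> ResourceRequest:
    """Edge controller: choose the platform serving the requested region."""
    platform_by_region = {"X": "edge-platform-x"}  # assumed inventory
    return ResourceRequest(platform_by_region[svc.region], svc.vcpu)


order = CustomerOrder("manufacturer-1", vcpu=8, region="X")
request = controller_translate(bss_translate(order, seq=1))
print(request)  # ResourceRequest(platform='edge-platform-x', vcpu=8)
```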

As the example above illustrates, edge platforms may be based on different vendor products, and this can happen even within a single service provider domain for reasons such as the provider’s organizational structure. LSO Presto APIs, which have the potential to absorb the differences between vendor-specific interfaces, are therefore central to reducing integration costs.
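The role of LSO Presto in hiding vendor differences resembles the adapter pattern. In this hypothetical sketch, the edge controller programs against one abstract interface, and each adapter converts the request into a vendor-specific form. The vendor request shapes and flavor table are invented for illustration and do not describe any real product or the Presto data model.

```python
from abc import ABC, abstractmethod


class EdgePlatformAdapter(ABC):
    """Uniform, Presto-style interface the edge controller programs against."""

    @abstractmethod
    def create_vm(self, name: str, vcpu: int, memory_gib: int) -> str:
        """Create a VM and return its platform-side identifier."""


class VendorAAdapter(EdgePlatformAdapter):
    # Hypothetical vendor A expects memory in MiB in a flat request.
    def create_vm(self, name: str, vcpu: int, memory_gib: int) -> str:
        request = {"vmName": name, "cpus": vcpu, "memMiB": memory_gib * 1024}
        return f"vendorA:{request['vmName']}:{request['memMiB']}MiB"


class VendorBAdapter(EdgePlatformAdapter):
    # Hypothetical vendor B only offers fixed "flavors" (vCPU, GiB).
    FLAVORS = {(2, 4): "small", (8, 32): "large"}

    def create_vm(self, name: str, vcpu: int, memory_gib: int) -> str:
        flavor = self.FLAVORS.get((vcpu, memory_gib))
        if flavor is None:
            raise ValueError(f"no vendor B flavor for {vcpu} vCPU / {memory_gib} GiB")
        return f"vendorB:{name}:{flavor}"


def deploy(platform: EdgePlatformAdapter, name: str) -> str:
    # Controller logic is written once, whatever vendor sits underneath.
    return platform.create_vm(name, vcpu=8, memory_gib=32)


for adapter in (VendorAAdapter(), VendorBAdapter()):
    print(deploy(adapter, "ai-inspection"))
```

Integrating an additional vendor platform then means writing one more adapter rather than changing the controller or the systems above it.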

Next, when an end user requests edge computing resources that the retail edge computing service provider cannot serve from its own edge platforms, the retail provider can procure the requested resources from a wholesale edge computing service provider via LSO Sonata APIs. If the request succeeds, the wholesale edge computing resources are reserved and provided back to the retail edge computing service provider. In this way, LSO Sonata APIs make it possible to transact edge computing resources between retail and wholesale edge computing service providers.
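The retail/wholesale interaction can be sketched as follows: the retail provider serves requests from its own footprint when it can and otherwise asks wholesale partners to reserve resources on its behalf. The classes, capacity model, and method names are hypothetical; a real Sonata exchange involves further steps, such as quoting and ordering, that are not modeled here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Allocation:
    region: str
    vcpu: int
    fulfilled_by: str  # provider whose footprint hosts the resources


class WholesaleProvider:
    """Hypothetical wholesale partner reached over a Sonata-style API."""

    def __init__(self, name: str, regions: set[str], capacity_vcpu: int):
        self.name, self.regions, self.capacity = name, regions, capacity_vcpu

    def reserve(self, region: str, vcpu: int) -> Optional[Allocation]:
        # Reserve only if the region is covered and capacity remains.
        if region in self.regions and vcpu <= self.capacity:
            self.capacity -= vcpu
            return Allocation(region, vcpu, self.name)
        return None


class RetailProvider:
    """Customer-facing provider; the customer only interacts with this one."""

    def __init__(self, name: str, regions: set[str], partners: list[WholesaleProvider]):
        self.name, self.regions, self.partners = name, regions, partners

    def order(self, region: str, vcpu: int) -> Allocation:
        if region in self.regions:
            # Own footprint (retail capacity is not modeled, for brevity).
            return Allocation(region, vcpu, self.name)
        for partner in self.partners:
            allocation = partner.reserve(region, vcpu)
            if allocation is not None:
                return allocation
        raise LookupError(f"no provider can serve {vcpu} vCPU in region {region}")


retail = RetailProvider("I", {"X"}, [WholesaleProvider("J", {"Z"}, capacity_vcpu=16)])
print(retail.order("X", 4))
print(retail.order("Z", 8))
```

From the manufacturer’s point of view, both orders go to Service Provider I; the wholesale reservation in region Z happens behind the retail provider’s interface.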

Edge Computing Standards

MEF’s working draft standard MEF W132 Edge Computing IaaS Attributes, currently available on the MEF Wiki, is the starting point of our initiative because it defines the basic building blocks of IaaS and their attributes. Once MEF W132 is finalized, MEF member contributors should derive from these attributes the MEF Service Model, which will include the data model and LSO service payload for use in the LSO Presto and LSO Legato APIs within the edge computing service provider, as discussed earlier.

In parallel, we see a need to pilot a product payload for edge computing services, based on MEF W132 and edge computing service provider expertise, which can be used in LSO Cantata and LSO Sonata APIs. Evolving such a product payload for LSO APIs in a Plan-Do-Check-Act (PDCA) cycle will not only strengthen the MEF standardization activities in this area, but also accelerate the production and business processes inside the edge computing service providers to enable global-scale edge computing services based on the multi-provider collaboration model.
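The relationship between the attributes and the two kinds of payload can be sketched as one set of IaaS attributes rendered into a versioned product payload for the customer- and partner-facing APIs and into a service payload for the provider’s internal APIs. The attribute names, JSON shapes, and version field are hypothetical placeholders, not content from MEF W132 or any published LSO schema; the version field merely hints at how a piloted payload could evolve across PDCA iterations.

```python
import json
from dataclasses import dataclass, asdict


# Hypothetical IaaS attributes; these names are NOT taken from MEF W132.
@dataclass
class ComputeAttributes:
    vcpu: int
    memory_gib: int
    storage_gib: int
    site_region: str


def product_payload(attrs: ComputeAttributes, version: str) -> str:
    """Commercial view for Cantata/Sonata-style exchanges (hypothetical shape)."""
    body = {"payloadVersion": version, "computeInstance": asdict(attrs)}
    return json.dumps(body, sort_keys=True)


def service_payload(attrs: ComputeAttributes, platform: str) -> str:
    """Technical view for Legato/Presto-style exchanges (hypothetical shape)."""
    body = {
        "targetPlatform": platform,
        "resources": {"cpu": attrs.vcpu, "ramGiB": attrs.memory_gib,
                      "diskGiB": attrs.storage_gib},
    }
    return json.dumps(body, sort_keys=True)


attrs = ComputeAttributes(vcpu=8, memory_gib=32, storage_gib=200, site_region="Y")
print(product_payload(attrs, version="0.1"))
print(service_payload(attrs, platform="edge-platform-y"))
```

Keeping both views derived from a single attribute definition is one way to stop the product and service payloads from drifting apart as each is revised.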

Learn More

We invite current and potential edge computing service providers in MEF, together with other interested members, to join NTT Communications and NEC/Netcracker in the MEF Showcase project Hot Mongoose, which is planned to run until at least November 2023.

Hot Mongoose will pilot the use of LSO APIs together with a MEF W132-based edge computing product payload to demonstrate how multiple edge computing service providers can dynamically and collectively deliver global edge computing IaaS to a single customer with minimal latency.

MEF Showcase 2023

A hallmark of industry innovation, the MEF Showcase program provides the seeds of new, collaborative work within MEF, as well as validating the real-world use cases behind MEF standards and the broader industry.

Within the program, MEF member service providers and technology suppliers collaborate to develop and demonstrate MEF 3.0-based use cases that validate, evolve, and inspire the work of MEF in the areas of LSO APIs, LSO Payloads and LSO Blockchain.

Stay tuned for more details on MEF Showcase projects in future posts.